| name | about | labels |
|---|---|---|
| Bug Report | Use this template for reporting a bug | kind/bug |

On the 910B environment, transformer network training fails with an AddN operator error

Hardware Environment (Ascend/GPU/CPU): Please delete the backend not involved:

/device ascend

Software Environment (Mandatory):

-- MindSpore version (e.g., 1.7.0.Bxxx) :

-- Python version (e.g., Python 3.7.5) :

-- OS platform and distribution (e.g., Linux Ubuntu 16.04):

-- GCC/Compiler version (if compiled from source):

commit_id = '[sha1]:a87635b6,[branch]:(HEAD,origin/master,origin/HEAD,master)'

runpkg_version:Milan_C17/20240414

Execute Mode (Mandatory) (PyNative/Graph):

Please delete the mode not involved:

/mode pynative

test_ms_pynative_memory_optimization_transformer_normal_1p_0001

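1. Take the transformer network from the MindSpore model_zoo and set the network to training mode.
2. Replace the default graph mode with PyNative mode, using the default memory interface for configuration.
3. Train the network and check whether the expected result is obtained.

Test case execution steps: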

source /home/miniconda3/bin/activate feature_39

export TRAIN_MODE=GRAPH_MODE

export DEVICE_TYPE=Ascend910B_Arm

export ENV_DEVICE=0

source solution_test/env_set.source -e ascend

cd solution_test/cases/01frame_func/02pynative/memory_optimization

pytest -s test_ms_pynative_memory_optimization_transformer_normal_1p_0001.py

Network training completes normally and the test case passes.

Error message:

Traceback (most recent call last):

File "/data/jenkins_workspace/TDT_deployment/solution_test/cases/01frame_func/02pynative/memory_optimization/test_ms_pynative_memory_optimization_transformer_normal_1p_0001/run_standalone_train/train.py", line 209, in <module>

run_transformer_train()

File "/data/jenkins_workspace/TDT_deployment/solution_test/cases/01frame_func/02pynative/memory_optimization/test_ms_pynative_memory_optimization_transformer_normal_1p_0001/run_standalone_train/src/model_utils/moxing_adapter.py", line 108, in wrapped_func

run_func(*args, **kwargs)

File "/data/jenkins_workspace/TDT_deployment/solution_test/cases/01frame_func/02pynative/memory_optimization/test_ms_pynative_memory_optimization_transformer_normal_1p_0001/run_standalone_train/train.py", line 205, in run_transformer_train

model.train(2, dataset, callbacks=callbacks, dataset_sink_mode=False)

File "/home/miniconda3/envs/feature_39/lib/python3.9/site-packages/mindspore/train/model.py", line 1082, in train

self._train(epoch,

File "/home/miniconda3/envs/feature_39/lib/python3.9/site-packages/mindspore/train/model.py", line 115, in wrapper

func(self, *args, **kwargs)

File "/home/miniconda3/envs/feature_39/lib/python3.9/site-packages/mindspore/train/model.py", line 630, in _train

self._train_process(epoch, train_dataset, list_callback, cb_params, initial_epoch, valid_infos)

File "/home/miniconda3/envs/feature_39/lib/python3.9/site-packages/mindspore/train/model.py", line 932, in _train_process

outputs = self._train_network(*next_element)

File "/home/miniconda3/envs/feature_39/lib/python3.9/site-packages/mindspore/nn/cell.py", line 697, in __call__

out = self.compile_and_run(*args, **kwargs)

File "/home/miniconda3/envs/feature_39/lib/python3.9/site-packages/mindspore/nn/cell.py", line 1018, in compile_and_run

return _cell_graph_executor(self, *new_args, phase=self.phase)

File "/home/miniconda3/envs/feature_39/lib/python3.9/site-packages/mindspore/common/api.py", line 1672, in __call__

return self.run(obj, *args, phase=phase)

File "/home/miniconda3/envs/feature_39/lib/python3.9/site-packages/mindspore/common/api.py", line 1711, in run

return self._exec_pip(obj, *args, phase=phase_real)

File "/home/miniconda3/envs/feature_39/lib/python3.9/site-packages/mindspore/common/api.py", line 132, in wrapper

results = fn(*arg, **kwargs)

File "/home/miniconda3/envs/feature_39/lib/python3.9/site-packages/mindspore/common/api.py", line 1691, in _exec_pip

return self._graph_executor(args, phase)

RuntimeError: Exec graph failed

E39999: Inner Error!

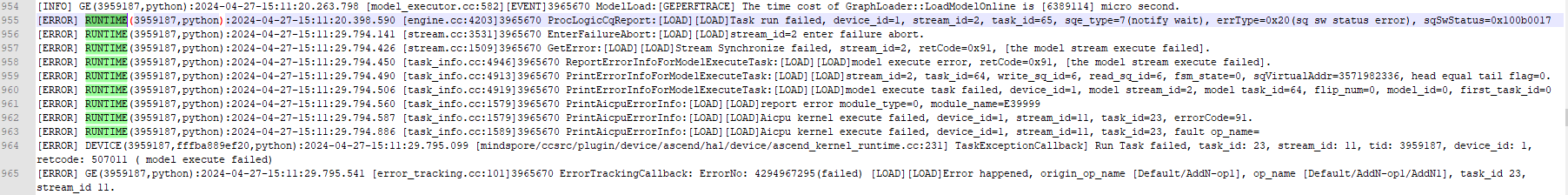

E39999: 2024-04-27-15:11:29.794.740 Aicpu kernel execute failed, device_id=1, stream_id=11, task_id=23, errorCode=91.[FUNC:PrintAicpuErrorInfo][FILE:task_info.cc][LINE:1579]

TraceBack (most recent call last):

Op execute failed. origin_op_name [Default/AddN-op1], op_name [Default/AddN-op1/AddN1], error_info: task_id 23, stream_id 11, tid 3959187, device_id 1, retcode 0x7bc83[FUNC:ErrorTrackingCallback][FILE:error_tracking.cc][LINE:105]

Aicpu kernel execute failed, device_id=1, stream_id=11, task_id=23, fault op_name=[FUNC:GetError][FILE:stream.cc][LINE:1512]

rtStreamSynchronizeWithTimeout execute failed, reason=[the model stream execute failed][FUNC:FuncErrorReason][FILE:error_message_manage.cc][LINE:53]

Assert ((rt_ret) == 0) failed[FUNC:DoRtStreamSyncWithTimeout][FILE:utils.cc][LINE:52]

Failed to execute rt v2 model for graph kernel_graph0_1, model_id 1.[FUNC:ExecuteWithStreamAsync][FILE:hybrid_model_rt_v2_executor.cc][LINE:791]

GraphManager RunGrapWithStreamhAsync failed,session id = 0, graph id = 1, stream = 0x24978b60.[FUNC:RunGraphWithStreamAsync][FILE:inner_session.cc][LINE:513]

[Run][Graph]Run graph with stream asyn failed, error code = 1343225857, session id = 0,graph id = 1, stream = 0x24978b60.[FUNC:RunGraphWithStreamAsync][FILE:ge_api.cc][LINE:800]
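When triaging a log like the one above, it can help to pull the failing operator and its task coordinates out of the "Op execute failed" line. The following is a minimal sketch, assuming the line keeps the exact layout seen in this log; the regular expression and the function name `parse_op_failure` are illustrative and not part of any MindSpore or CANN tooling.

```python
import re

# Layout of the "Op execute failed" line, inferred from the log in this issue only.
OP_FAILURE = re.compile(
    r"origin_op_name \[(?P<origin_op>[^\]]+)\], "
    r"op_name \[(?P<op>[^\]]+)\], "
    r"error_info: task_id (?P<task_id>\d+), stream_id (?P<stream_id>\d+), "
    r"tid (?P<tid>\d+), device_id (?P<device_id>\d+), "
    r"retcode (?P<retcode>0x[0-9a-fA-F]+)"
)


def parse_op_failure(line):
    """Return the fields of an 'Op execute failed' line as a dict, or None if absent."""
    match = OP_FAILURE.search(line)
    if match is None:
        return None
    info = match.groupdict()
    # Numeric identifiers are converted to int; retcode stays as the hex string.
    for key in ("task_id", "stream_id", "tid", "device_id"):
        info[key] = int(info[key])
    return info


# The line copied from the log above.
SAMPLE = (
    "Op execute failed. origin_op_name [Default/AddN-op1], "
    "op_name [Default/AddN-op1/AddN1], error_info: task_id 23, stream_id 11, "
    "tid 3959187, device_id 1, retcode 0x7bc83"
    "[FUNC:ErrorTrackingCallback][FILE:error_tracking.cc][LINE:105]"
)
failure = parse_op_failure(SAMPLE)
```

Here `failure["origin_op"]` identifies the AddN operator and `failure["task_id"]` / `failure["stream_id"]` match the values in the preceding "Aicpu kernel execute failed" line, which confirms both messages describe the same task.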

Assigning to 王睿

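There is currently no 910B machine available to reproduce this, so the analysis is based on the log information provided by the tester:

The first ERROR log entry is raised in RUNTIME; we are contacting HiSilicon colleagues to help locate the problem.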

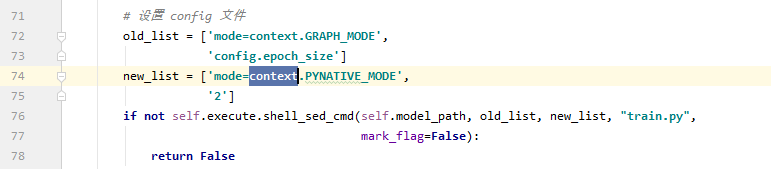

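The test case currently runs successfully in PyNative mode; the problem occurs in graph mode.

The test case is meant to force the mode to PYNATIVE, but the mode switch inside the test case fails, so execution ends up in the graph-mode flow.

The tester @慕冬蕊 is on leave; pending alignment with her on which scenario this test case is meant to verify.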

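With export GRAPH_OP_RUN=1 set in graph mode, the test case runs successfully.

PyNative mode has no problem.

KBK (kernel-by-kernel) mode has no problem.

The error is raised in the GE backend.

Help from GE backend colleagues is needed to locate the problem.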
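The GRAPH_OP_RUN=1 comparison can be repeated from a small launcher instead of a manual export. This is only a sketch: the helper name `kernel_by_kernel_env` is hypothetical, and the pytest command is the one from the reproduction steps in this issue.

```python
import os


def kernel_by_kernel_env(base_env=None):
    """Return a copy of the environment with GRAPH_OP_RUN=1 set.

    The caller's environment (or os.environ) is not modified.
    """
    env = dict(os.environ if base_env is None else base_env)
    env["GRAPH_OP_RUN"] = "1"
    return env


# Command taken from the reproduction steps in this issue.
PYTEST_CMD = [
    "pytest",
    "-s",
    "test_ms_pynative_memory_optimization_transformer_normal_1p_0001.py",
]
```

From the test case directory, `subprocess.run(PYTEST_CMD, env=kernel_by_kernel_env(), check=True)` would then run the case with the variable set, while a second run with an unmodified environment gives the GE-backend result for comparison.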

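After discussion, the preliminary judgment is that the problem lies in the HiSilicon operator processing flow. We plan to contact the responsible HiSilicon owner for analysis as soon as the holiday ends.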

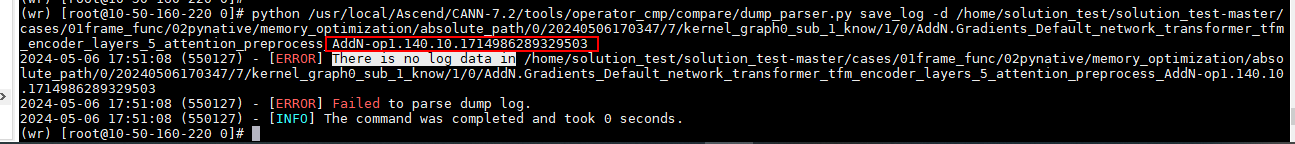

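The problem has now been narrowed down to a custom AICPU operator failing to execute; we are dumping data to locate it.

Following the guide on writing custom AICPU operator logs to disk:

https://wiki.huawei.com/domains/21427/wiki/40193/WIKI202305091134974?title=%E7%A1%AE%E5%AE%9A%E6%98%AF%E5%90%A6%E8%B0%83%E7%94%A8AICPU%E7%AE%97%E5%AD%90

The custom AICPU operator logs obtained by dump alone are not sufficient to locate the problem; we need to add our own logging and build a package to locate it.

The dumped file shows no log data; we are consulting @潘智辉 from the operator team.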

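Aligned with testers @慕冬蕊 and @文理: this test case only verifies PyNative mode.

Because the forced string match that the test case uses to switch modes did not succeed, execution ran in graph mode and failed; the test case needs to be adapted.

Verification confirms that the test case has no problem in PyNative mode and runs successfully.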
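The thread does not show how the test case rewrites the mode, so the following is only a hypothetical sketch of a string-based mode switch that fails loudly when nothing matches, rather than silently staying in graph mode. The function name `force_pynative` and the assumption that the training script spells the mode as the token GRAPH_MODE are both illustrative.

```python
import re

# Assumption: the script names the mode with the literal token GRAPH_MODE.
GRAPH_MODE_TOKEN = re.compile(r"\bGRAPH_MODE\b")


def force_pynative(source):
    """Replace every GRAPH_MODE token with PYNATIVE_MODE.

    Raises ValueError when no token is found, so an unmatched pattern
    cannot go unnoticed and leave the script running in graph mode.
    """
    patched, count = GRAPH_MODE_TOKEN.subn("PYNATIVE_MODE", source)
    if count == 0:
        raise ValueError("no GRAPH_MODE token found; mode switch was not applied")
    return patched
```

Checking the substitution count is the design point here: a silent no-op is what let the original case fall through to the graph-mode flow.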

Regression version:

commit_id = '[sha1]:d8802c69,[branch]:(HEAD,origin/master,origin/HEAD,master)'

runpkg_version:Milan_C17/20240414

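Regression steps: follow the reproduction steps in this issue

Basic functionality: after adapting the test case, the test run is normal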

INFO 2024-05-07 12:06:12 - test_ms_pynative_memory_optimization_transformer_normal_1p_0001 - base.py:teardown:140 - The base teardown is running

=== 1 passed, 4 warnings in 234.04s (0:03:54) ====

Test conclusion: regression passed
